The title of this blog is "On Languages ... etc etc ... Systems and Processes", but until now I never said anything about processes (or did you think I meant UNIX processes?), so let us write something about them now. As everybody lately seems to be happy with Agile/XP (like here and here), let's rant about that a little. So beware, it's (at least partially) a #RANT#, so don't try to kill or evangelize me!

1. The Gripes

The one thing you encounter everywhere in process land today is Agile. Well, I have a few gripes with it, which I should have written up earlier (and I really wanted to, for the last 3 years or so), but now the time has come. On the other hand, now that Agile is mainstream, maybe my points aren't valid anymore? You know, "the normative force of the factual"* :(. Maybe, but let us begin at the beginning:

When I read the "Agile Manifesto" and the XP explanations some 10 years ago, I was just amazed. It was liberation! What I particularly liked were things like pair programming, test driven design, and iterative development. But today I must admit that I was somehow mistaken; maybe I got it all wrong? Somehow it's all different from what I imagined it would be, and I don't like it very much. Two examples:

a) pair programming

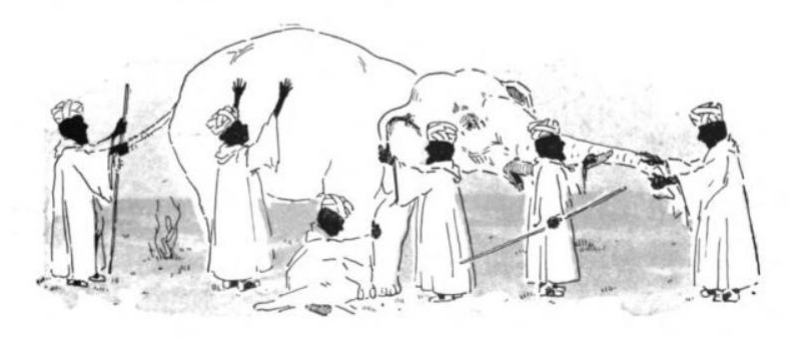

In my eyes it was all about two people caring about a piece of code, discussing its design and reviewing major rewrites, simply because discussing something with another person can show you another perspective on the problem.

And now? The reality? It's crazy: it's about keyboard swapping, with two people coding at the same time. For 15 minutes it's my keyboard, then it's yours. Come on, are we in Henry Ford's car factory? Or were we supposed to be doing creative work? #RANT#

But there are people who actually like it (here, here), aren't there? I'd say it could work, but only if you are paired with someone at your level. Not just another beginner with a big ego, thank you very much! Even then I find it overkill: two people doing the work of one? Can't people do decent work by themselves anymore? #RANT#

b) test driven development/design (TDD)

I liked this idea very much. OK, I've only seldom written the tests before the code myself, but there have always been tests for a new feature, written either before I coded it or afterwards. Because really, the order doesn't matter if you know what feature you are going to implement.

But now? The reality? You should always write tests first, or you are not agile. And why? It gets even better: TDD can mean test driven design! A good example of this could be found in a blog posting** by the venerable Uncle Bob, where he develops a prime factorization algorithm by TDD, i.e. by writing a series of increasingly complicated tests and reworking the algorithm accordingly. To me that looks crazy, like groping in the dark for the right solution. Excuse me?! #RANT#
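For readers who haven't seen the kata, here is a minimal Python sketch of the kind of code it typically converges on. It is a reconstruction, not Uncle Bob's original code; the function name `prime_factors` and the exact order of the tests are assumptions. The asserts at the bottom mirror the step-by-step test sequence, each new test nudging the implementation a little further.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n in ascending order, with repetition."""
    factors: list[int] = []
    candidate = 2
    while n > 1:
        # Divide out each candidate as often as it goes in. Composite
        # candidates never divide n here, because their prime factors
        # have already been removed by smaller candidates.
        while n % candidate == 0:
            factors.append(candidate)
            n //= candidate
        candidate += 1
    return factors


# A test sequence of the kind the kata grows one case at a time
# (assumed ordering, for illustration only).
assert prime_factors(1) == []
assert prime_factors(2) == [2]
assert prime_factors(3) == [3]
assert prime_factors(4) == [2, 2]
assert prime_factors(6) == [2, 3]
assert prime_factors(8) == [2, 2, 2]
assert prime_factors(9) == [3, 3]
```

The end result is plain trial division: fine for kata-sized inputs, but slow when `n` has a large prime factor, since `candidate` is incremented all the way up to it.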

Why should we waste time reworking our solution over and over? The standard answer is: because it results in decoupled code. Come on, aren't there other ways to write decoupled code? Like the old and unfashionable practice of thinking a little about its design? #RANT#

2. Some Summary and More Gripes

So by now XP is for me not freedom, but rather the next horsewhip of the pointy-haired boss types. It's more about following the rules and observing them religiously than about anything else. When I first read about XP/Agile, I thought it was targeted at proficient developers, you know, at responsible adults, who should be allowed to be adults and just do their job! But now I increasingly have the impression that it's targeted at beginners instead. Obviously, they need clear rules and directions. So maybe it's about the overall prevalence (and importance) of beginners in our industry?

Well, maybe (just maybe) I'm opposing the above-mentioned XP principles because they introduce a process-like quality into XP, and I feel kind of betrayed now? Wasn't it supposed to be about freedom and free, interacting individuals? Maybe that was just an illusion; maybe the recipe of the Agile Manifesto was too simple?

In this excellent post about "post agile" development, Gwaredd Mountain states that no methodology is purely "agile" or purely "process-oriented". Rather, each contains both "freedom" (agile) and "process" elements, since a mix of both is needed in the real world. So even the supposedly totally free XP has to have some emergency brakes. Well, so much for my illusions of a better world :(. Nonetheless, these emergency brakes are rather expensive: a second pair of eyes to watch over "Brownian motion"-like TDD development? That's my private opinion, but arguably it's taking things to the extreme.

But there's a third problem, and it's not easily dismissed:

c) evangelism

In the Agile world-view there's either agile or waterfall, stone age, and nuclear winter. A typical Twitter post says:

Agile change is change. Change is hard for people

Thus, if you can't get enthusiastic about Agile, it means that you are beyond reform and just can't see the light! I think the Agile trainer guild is responsible for that***. As their training courses mainly target beginners, they must give them rules to be followed in all circumstances. But I cannot understand why this must be promulgated to the general public. You know, "Only a Sith deals in absolutes": it's not a sign of wisdom to divide the world into the one right way and all the others! #RANT#

OK guys, you must earn a living, but doesn't engineering decency mean anything to you? Or can't you think for yourselves, and would you rather become followers? Silver bullet, anyone? That irritates me, but at the same time it's interesting: what could possibly come out of a really intense all-night talk with an Agile trainer? #RANT#

It's a little-known fact, but Dr. Royce's defining paper for the waterfall process already contained iterations (see figures 3 and 4)! Agile is not that original; people were able to think and use common sense before it came along! #RANT#

3. So What Do I Think Myself?

You may ask, how should we develop software then? We're not supposed to go back to "cowboy coding", are we? Steve Yegge's post describes an alternative, the pretty informal Google development practices of that time, and praises them as the solution. Well, I must admit it does sound good, but even Google wanted to introduce some process not long after that ("Shh, we are adding a process"). That article describes how the Scrum process was introduced in the AdSense project (if I'm not mistaken), although not as a full embodiment of Scrum. But it seems that for time-critical, big-money projects you somehow need some kind of whip in the end... :(

So what do we need a process for? Do we need it at all?

First, let me quote Aristotle (via the "Pragmatic Programmers" book):

The 2nd insight comes from the field of avalanche avoidance (because I'm something of an off-piste skier). What the professionals always do is keep a checklist in the car and go through it every time they set off. Because people forget, people do make mistakes, and in that profession, when they do, it can cost them their lives. That's a kind of process too: you don't believe that you could make a mistake, but in the grand scheme of things, you probably will. So you need some annoying routine, even if you don't think so!

So what would I take? A methodology I liked rather well was "Tracer Bullet Development" from the above-mentioned "Pragmatic Programmers" book. It's simple, it has a lot of common sense (which I seem to like more than religious fervor), and it goes along the lines of my own understanding of agile development, which I arrived at during the last 10 or so years.

Because I'm not an enemy of agile. More than that, I think it's a necessity! In real life there's constant change, and the original design**** of a system cannot be sustained: either it dies or it adapts! So for me, agile got it right in its basic proposition: you must always iterate. As the ancients used to say, "iterare necesse est, vivere non est necesse" :).

And what about my Agile/XP gripes? Here I'm with Steve Yegge: there's "Agile" and "agile", the Agile of the trainers and pointy-haired bosses, and the agile of the pragmatists. And I'm with the latter.

Looking forward to your comments.

---

* or "die normative Kraft des Faktischen", a phrase coined by Georg Jellinek. Roughly: whatever is, is good, simply because it is (i.e. exists), or something like that.

** sorry, the original blog post I read has vanished; this one is the current version, reworked as a programming kata:

http://butunclebob.com/ArticleS.UncleBob.ThePrimeFactorsKata

*** Read Steve Yegge's post about the so-called "Agile" experts: "Good Agile, Bad Agile", http://steve-yegge.blogspot.com/2006/09/good-agile-bad-agile_27.html, or this one: "Agile Ruined My Life", http://www.whattofix.com/blog/archives/2010/09/agile-ruined-my.php, and look out for the fraud markers!

**** For example, take this fascinating and/or terrifying case of a dying architecture (from the "Refactoring in Large Software Projects" book):

Alas, everything started out so well! The concepts were clear-cut. The technical prototype was a success, the customer was thrilled and Gartner Group decided that the architecture was flawless. Then real life began to take its toll: the number of developers went up, the average qualification dropped, the architect always had other things on his plate – and time pressure increased.

The consequence was that the architecture concepts were executed less and less consistently. Dependencies were in a tangle, performance dropped (too many client/server hops and too many single database queries), and originally small modifications turned into huge catastrophes. To a certain extent the architecture concepts were circumvented on purpose. For example, classes were instantiated via Reflection because the class was not accessible at compile-time.