This is a guest post written by Victor Zverovich* (aka @vzverovich) for C++ Stories. I liked it so much (not least because of the funny S. Lem references 😀; I used to be a big fan of Lem in my time!) that I asked for permission to repost it here. So you can read it here, including new, fresh pictures from Stanislaw Lem's works.

My take on std::format:

- you can say what you want about C++20, but it allowed us to solve the problems of both iostreams and printf by reusing their strengths and transcending their limitations!

>>>> the original text starts here >>>>

Intro

(with apologies to Stanisław Lem)

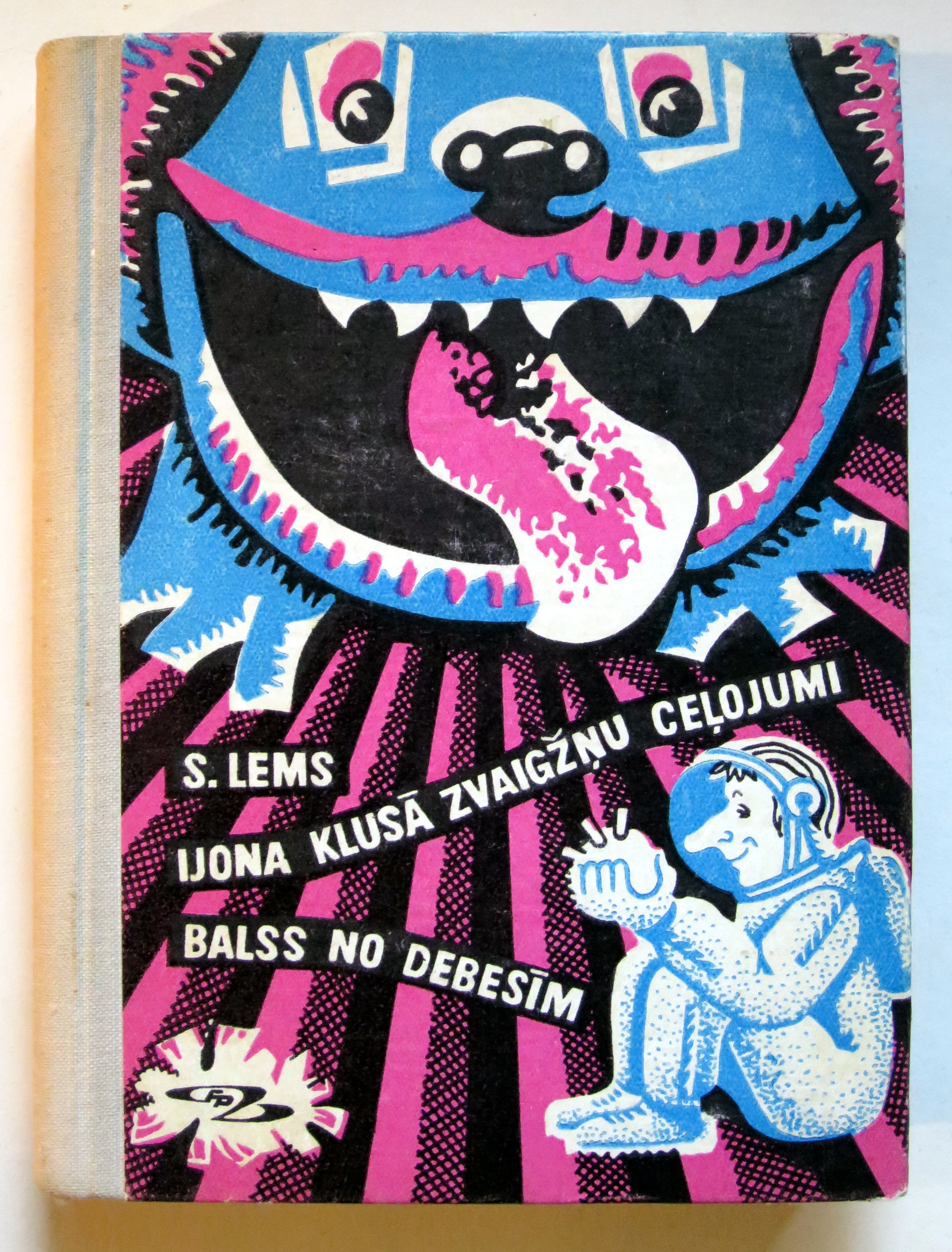

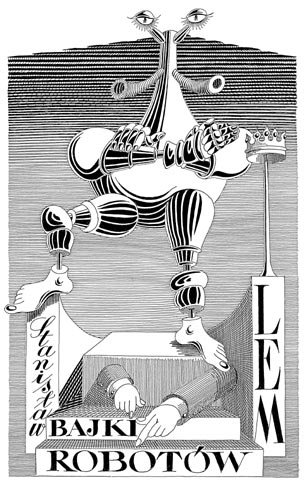

Star Diaries, Latvian edition

Consider the following use case: you are developing the Enteropia[2]-first Sepulka[3]-as-a-Service (SaaS) platform and have server code written in C++ that checks the value of a requested sepulka’s squishiness received over the wire and, if the value is invalid, logs it and returns an error to the client. Squishiness is passed as a single byte, and you want to format it as a 2-digit hexadecimal integer, because that is, of course, the Ardrite[1] National Standards Institute (ANSI) standard representation of squishiness. You decide to try the different formatting facilities provided by C++ and then pick one for logging.

First you try iostreams:

```cpp
#include <cstdint>
#include <iomanip>
#include <iostream>

void log_error(std::ostream& log, std::uint_least8_t squishiness) {
  log << "Invalid squishiness: "
      << std::setfill('0') << std::setw(2) << std::hex
      << squishiness << "\n";
}
```

The code is a bit verbose, isn’t it? You also need to pull in an additional header, <iomanip>, to do even basic formatting. But that’s not a big deal.

Ardrites testing

However, when you try to test this code (inhabitants of Enteropia have an unusual tradition of testing their logging code), you find out that the code doesn’t do what you want. For example,

```cpp
log_error(std::cout, 10);
```

prints

```
Invalid squishiness: 0
```

This is surprising for two reasons: first, it prints one character instead of two, and second, the printed value is wrong. After a bit of debugging, you figure out that iostreams treat the value as a character on your platform (std::uint_least8_t is typically a typedef for unsigned char, and in ASCII 10 is the newline character), and that the extra newline in your log is not a coincidence. An even worse scenario is that it works on your system but not on the system of your most beloved customer.

So you add a cast to fix this, which makes the code even more verbose (see the code on Compiler Explorer):

```cpp
log << "Invalid squishiness: "
    << std::setfill('0') << std::setw(2) << std::hex
    << static_cast<unsigned>(squishiness) << "\n";
```
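For reference, here is a sketch of the whole corrected function. The log_to_string helper is hypothetical (not part of the original example); it writes into a std::ostringstream so the output is easy to check:

```cpp
#include <cstdint>
#include <iomanip>
#include <iostream>
#include <sstream>
#include <string>

void log_error(std::ostream& log, std::uint_least8_t squishiness) {
  // The cast makes iostreams print a number instead of a character.
  log << "Invalid squishiness: "
      << std::setfill('0') << std::setw(2) << std::hex
      << static_cast<unsigned>(squishiness) << "\n";
}

// Hypothetical test helper: captures the log line in a string.
std::string log_to_string(std::uint_least8_t squishiness) {
  std::ostringstream out;
  log_error(out, squishiness);
  return out.str();
}
```

With the cast in place, log_to_string(10) yields "Invalid squishiness: 0a\n".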

Can the Ardrites do better than that?

Yes, they can.

Format strings

Surprisingly, the answer comes from an ancient 1960s (Gregorian calendar) Earth technology: format strings (in a way, this is similar to the story of coroutines). C++ had this technology all along in the form of the printf family of functions, and later rediscovered it in std::put_time.
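As a small illustration of that last point, std::put_time already takes a strftime-style format string. The iso_date helper below is a hypothetical name used only for this sketch:

```cpp
#include <ctime>
#include <iomanip>
#include <sstream>
#include <string>

// Formats a broken-down time using a strftime-style format string.
std::string iso_date(const std::tm& t) {
  std::ostringstream out;
  out << std::put_time(&t, "%Y-%m-%d");
  return out.str();
}
```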

Some current Ardrite technology

What makes format strings so useful is expressiveness. With a very simple mini-language, you can easily express complex formatting requirements. To illustrate this, let’s rewrite the example above using printf:

```cpp
#include <cstdint>
#include <cstdio>

void log_error(std::FILE* log, std::uint_least8_t squishiness) {
  std::fprintf(log, "Invalid squishiness: %02x\n", squishiness);
}
```

Isn’t it beautiful in its simplicity? Even if you’ve somehow never seen printf in your life, you can learn the syntax in no time. In contrast, can you always remember which iostreams manipulator to use? Is it std::fill or std::setfill? Why std::setw and std::setprecision and not, say, std::setwidth or std::setp?

A lesser-known advantage of printf is atomicity. A format string and its arguments are passed to a formatting function in a single call, which makes it easier to write them atomically, without interleaved output when writing from multiple threads.

In contrast, with iostreams, each argument and parts of the message are fed into formatting functions separately, which makes synchronization harder. This problem was only addressed in C++20 with the introduction of an additional layer of std::basic_osyncstream.**

However, the C printf comes with its own set of problems, which iostreams addressed:

- Safety: C varargs are inherently unsafe, and it is the user’s responsibility to make sure that the type information is carefully encoded in the format strings. Some compilers issue a warning if the format specification doesn’t match the argument types, but only for literal strings. Without extra care, this ability is often lost when wrapping printf in another API layer such as logging. Compilers can also lie to you in these warnings.

- Extensibility: you cannot format objects of user-defined types with printf.

With the introduction of variadic templates and constexpr in C++11, it has become possible to combine the advantages of printf and iostreams. This has finally been done in the C++20 formatting facility, based on a popular open-source formatting library called {fmt}.

The C++20 formatting library

Let’s implement the same logging example using C++20 std::format:

```cpp
#include <cstdint>
#include <format>
#include <iostream>

void log_error(std::ostream& log, std::uint_least8_t squishiness) {
  log << std::format("Invalid squishiness: {:02x}\n", squishiness);
}
```

As you can see, the formatting code is similar to that of printf, with one notable difference: braces {} are used as delimiters instead of %. This allows us and the parser to find format specification boundaries easily and is particularly essential for more sophisticated formatting (e.g. formatting of date and time).

Unlike standard printf, std::format supports positional arguments, i.e. referring to an argument by its index, which is separated from the format specifiers by the ':' character:

```cpp
log << std::format("Invalid squishiness: {0:02x}\n", squishiness);
```

Positional arguments allow using the same argument multiple times.

Otherwise, the format syntax of std::format, which is largely borrowed from Python, is very similar to printf’s. In this case the format specifications are identical (02x) and have the same semantics, namely, format a 2-digit integer in hexadecimal with zero padding.

But because std::format is based on variadic templates instead of C varargs and is fully type-aware (and type-safe), it simplifies the syntax even further by getting rid of all the numerous printf specifiers that only exist to convey type information. The printf example from earlier is in fact incorrect and exhibits undefined behaviour. Strictly speaking, it should have been

```cpp
#include <cinttypes>  // for PRIxLEAST8
#include <cstdint>
#include <cstdio>

void log_error(std::FILE* log, std::uint_least8_t squishiness) {
  std::fprintf(log, "Invalid squishiness: %02" PRIxLEAST8 "\n",
               squishiness);
}
```

That doesn’t look as appealing. More importantly, the use of macros is considered inappropriate in a civilized Ardrite society.

What's in vogue in the Ardrite society?

Here is a (possibly incomplete) list of specifiers made obsolete: hh, h, l, ll, L, z, j, t, I, I32, I64, q, as well as a zoo of 84 macros (the trailing x stands for the bit width 8, 16, 32 or 64, and the u, o, x and X macros are used with the corresponding unsigned types such as uintx_t):

| Prefix | intx_t | int_leastx_t | int_fastx_t | intmax_t | intptr_t |
|--------|--------|--------------|-------------|----------|----------|
| d | PRIdx | PRIdLEASTx | PRIdFASTx | PRIdMAX | PRIdPTR |
| i | PRIix | PRIiLEASTx | PRIiFASTx | PRIiMAX | PRIiPTR |
| u | PRIux | PRIuLEASTx | PRIuFASTx | PRIuMAX | PRIuPTR |
| o | PRIox | PRIoLEASTx | PRIoFASTx | PRIoMAX | PRIoPTR |
| x | PRIxx | PRIxLEASTx | PRIxFASTx | PRIxMAX | PRIxPTR |
| X | PRIXx | PRIXLEASTx | PRIXFASTx | PRIXMAX | PRIXPTR |

In fact, even x in the std::format example is not an integer type specifier but a hexadecimal format specifier, because the information that the argument is an integer is preserved. This allows omitting all format specifiers altogether to get the default (decimal for integers) formatting:

```cpp
log << std::format("Invalid squishiness: {}\n", squishiness);
```

User-defined types

Following a popular trend in the Ardrite software development community, you decide to switch all your code from std::uint_least8_t to something more strongly typed and introduce the squishiness type:

```cpp
enum class squishiness : std::uint_least8_t {};
```

Also, you decide that you always want to use the ANSI-standard formatting of squishiness, which will hopefully allow you to hide all the ugliness in operator<<:

```cpp
std::ostream& operator<<(std::ostream& os, squishiness s) {
  return os << std::setfill('0') << std::setw(2) << std::hex
            << static_cast<unsigned>(s);
}
```

Now your logging function looks much simpler:

```cpp
void log_error(std::ostream& log, squishiness s) {
  log << "Invalid squishiness: " << s << "\n";
}
```

Then you decide to add another important piece of information, the sepulka security number (SSN), to the log, although you are afraid it might not pass the review because of privacy concerns:

```cpp
void log_error(std::ostream& log, squishiness s, unsigned ssn) {
  log << "Invalid squishiness: " << s << ", ssn=" << ssn << "\n";
}
```

To your surprise, SSN values in the log are wrong, for example:

```cpp
log_error(std::cout, squishiness(0x42), 12345);
```

gives

```
Invalid squishiness: 42, ssn=3039
```

After another debugging session, you realize that the std::hex flag is sticky, and the SSN ends up being formatted in hexadecimal (0x3039 is 12345). So you have to change your overloaded operator<< to

```cpp
std::ostream& operator<<(std::ostream& os, squishiness s) {
  std::ios_base::fmtflags f(os.flags());
  char fill = os.fill();  // std::setfill is sticky as well
  os << std::setfill('0') << std::setw(2) << std::hex
     << static_cast<unsigned>(s);
  os.flags(f);  // restore state!
  os.fill(fill);
  return os;
}
```

A pretty complicated piece of code just to print out an SSN in decimal format.
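The save-and-restore dance can be made exception-safe and reusable with a small RAII helper. The sketch below is hypothetical (stream_state_guard is not a standard class, although Boost offers similar state savers); like the corrected operator<< above, it restores the fill character as well as the flags:

```cpp
#include <cstdint>
#include <iomanip>
#include <ios>
#include <ostream>
#include <sstream>
#include <string>

enum class squishiness : std::uint_least8_t {};

// Hypothetical RAII helper: saves the format flags and the fill character
// and restores both when it goes out of scope.
class stream_state_guard {
 public:
  explicit stream_state_guard(std::ostream& os)
      : os_(os), flags_(os.flags()), fill_(os.fill()) {}
  ~stream_state_guard() {
    os_.flags(flags_);
    os_.fill(fill_);
  }
  stream_state_guard(const stream_state_guard&) = delete;
  stream_state_guard& operator=(const stream_state_guard&) = delete;

 private:
  std::ostream& os_;
  std::ios_base::fmtflags flags_;
  char fill_;
};

std::ostream& operator<<(std::ostream& os, squishiness s) {
  stream_state_guard guard(os);  // restored on return or exception
  return os << std::setfill('0') << std::setw(2) << std::hex
            << static_cast<unsigned>(s);
}

// Hypothetical test helper mirroring log_error.
std::string render(squishiness s, unsigned ssn) {
  std::ostringstream out;
  out << "Invalid squishiness: " << s << ", ssn=" << ssn << "\n";
  return out.str();
}
```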

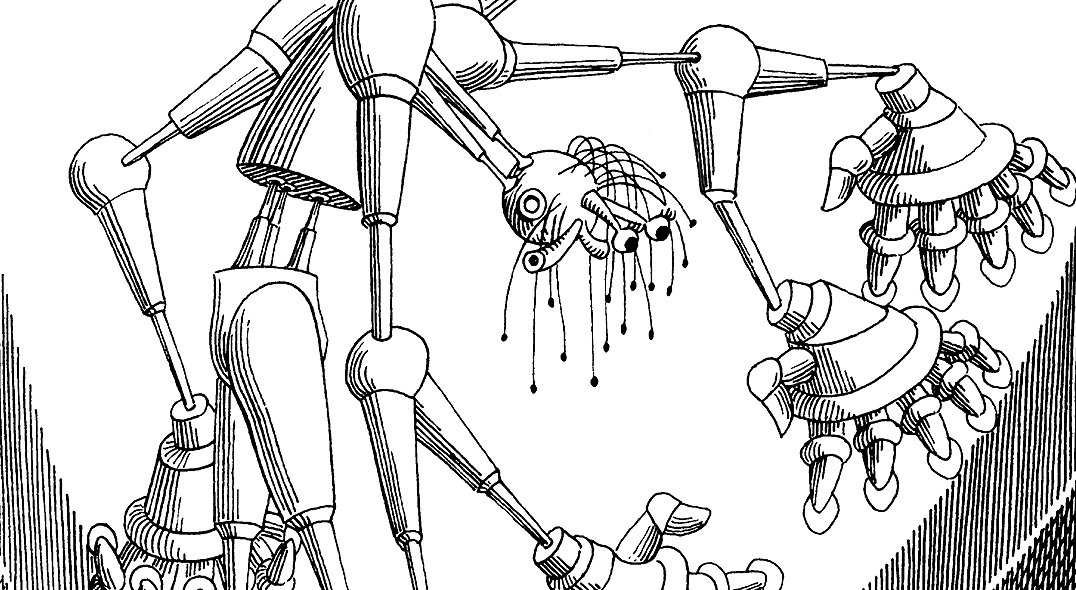

A complicated user type waiting to be printed

std::format follows a more functional approach and doesn’t share formatting state between the calls. This makes reasoning about formatting easier and brings performance benefits because you don’t need to save/check/restore state all the time.

To make squishiness objects formattable, you just need to specialize the formatter template, and you can reuse existing formatters:

```cpp
#include <format>
#include <ostream>

template <>
struct std::formatter<squishiness> : std::formatter<unsigned> {
  // const: the standard library may call format() on a const formatter.
  auto format(squishiness s, std::format_context& ctx) const {
    return std::format_to(ctx.out(), "{:02x}", static_cast<unsigned>(s));
  }
};

void log_error(std::ostream& log, squishiness s, unsigned ssn) {
  log << std::format("Invalid squishiness: {}, ssn={}\n", s, ssn);
}
```

You can see the message "Invalid squishiness: {}, ssn={}\n" as a whole, not interleaved with <<, which is more readable and less error-prone.

Custom formatting functions

Now you decide that you don’t want to log everything to a stream but use your system’s logging API instead. All your servers run GNU/systemd, the operating system popular on Enteropia, where GNU stands for "GNU’s, not Ubuntu", so you implement logging via its journal API. Unfortunately, the journal API is very user-unfriendly and unsafe. So you end up wrapping it in a type-safe layer and making it more generic:

```cpp
#include <systemd/sd-journal.h>
#include <syslog.h>  // LOG_ERR

#include <format>  // no need for <ostream> anymore
#include <string_view>

void vlog_error(std::string_view format_str, std::format_args args) {
  sd_journal_send("MESSAGE=%s", std::vformat(format_str, args).c_str(),
                  "PRIORITY=%i", LOG_ERR, nullptr);
}

template <typename... Args>
inline void log_error(std::string_view format_str,
                      const Args&... args) {
  vlog_error(format_str, std::make_format_args(args...));
}
```

Now you can use log_error like any other formatting function, and it will log to the system journal:

```cpp
log_error("Invalid squishiness: {}, ssn={}\n",
          squishiness(0x42), 12345);
```

The reason why we don’t call sd_journal_send directly in log_error, but rather have the intermediary vlog_error, is to make vlog_error a normal function rather than a template and thus avoid instantiating it for every combination of argument types passed to it***. This dramatically reduces binary code size. log_error is a template, but because it is inlined and doesn’t do anything other than capturing the arguments, it doesn’t add much to the code size either.

Ardrite custom formatter machine

The std::vformat function performs the actual formatting and returns the result as a string, which you then pass to sd_journal_send. You can avoid string construction with std::vformat_to, which writes to an output iterator, but this code is not performance-critical, so you decide to leave it as is.

Date and time formatting

Finally you decide to log how long a request took and find out that std::format makes it super easy too:

```cpp
void log_request_duration(std::ostream& log,
                          std::chrono::milliseconds ms) {
  log << std::format("Processed request in {}.", ms);
}
```

This writes both the duration and its time units, for example:

```
Processed request in 42ms.
```

std::format supports formatting not just durations but all chrono date and time types via expressive format specifications based on strftime, for example:

```cpp
std::format("Logged at {:%F %T} UTC.",
            std::chrono::system_clock::now());
```

Beyond std::format

In the process of developing your SaaS system, you’ve learned about the features of C++20 std::format, namely format strings, positional arguments, date and time formatting, extensibility for user-defined types, as well as different output targets and statelessness, and how they compare to the earlier formatting facilities.

Hello Earthlings!

Note to Earthlings: your standard libraries may not yet implement C++20 std::format, but don’t panic 🚀: all of these features and much more are available in the open-source {fmt} library. Some additional features include:

- formatted I/O

- high-performance floating-point formatting

- compile-time format string checks

- better Unicode support

- text colors and styles

- named arguments

All of the examples will work in {fmt} with minimal changes, mostly replacing std::format with fmt::format and <format> with <fmt/core.h> or another relevant header (e.g. <fmt/chrono.h> for the date and time examples).

More about std::format

If you’d like to read more about std::format, here are some good resources:

Glossary

[1] Ardrites – intelligent beings, polydiaphanohedral, nonbisymmetrical and pelissobrachial, belonging to the genus Siliconoidea, order Polytheria, class Luminifera.

[2] Enteropia – 6th planet of a double (red and blue) star in the Calf constellation

[3] Sepulka – pl: sepulki, a prominent element of the civilization of Ardrites from the planet of Enteropia; see “Sepulkaria”

[4] Sepulkaria – sing: sepulkarium, establishments used for sepuling; see “Sepuling”

[5] Sepuling – an activity of Ardrites from the planet of Enteropia; see “Sepulka”

The picture and references come from the book Star Diaries by Stanislaw Lem.

>>>> and here ends the original text... >>>>

C++23 improvements

(Added by Bartlomiej Filipek, aka Bartek 🙂):

std::format doesn’t stop with C++20. The ISO Committee and C++ experts have a bunch of additions to this powerful library component. Here’s a quick overview of changes that we’ll get****:

- P2216R3: std::format improvements - improving safety via compile-time format string checks and also reducing the binary size of format_to. This is applied as a Defect Report against C++20, so compiler vendors can implement it before the official C++23 Standard is approved!

- P2093: Formatted output - a better, safer and faster way to output text, e.g. std::print("Hello, {}!", name);

- possibly in C++23: P2286 Formatting Ranges - this will add formatters for ranges, tuples and pairs.

As you can see, a lot is going on in this area!

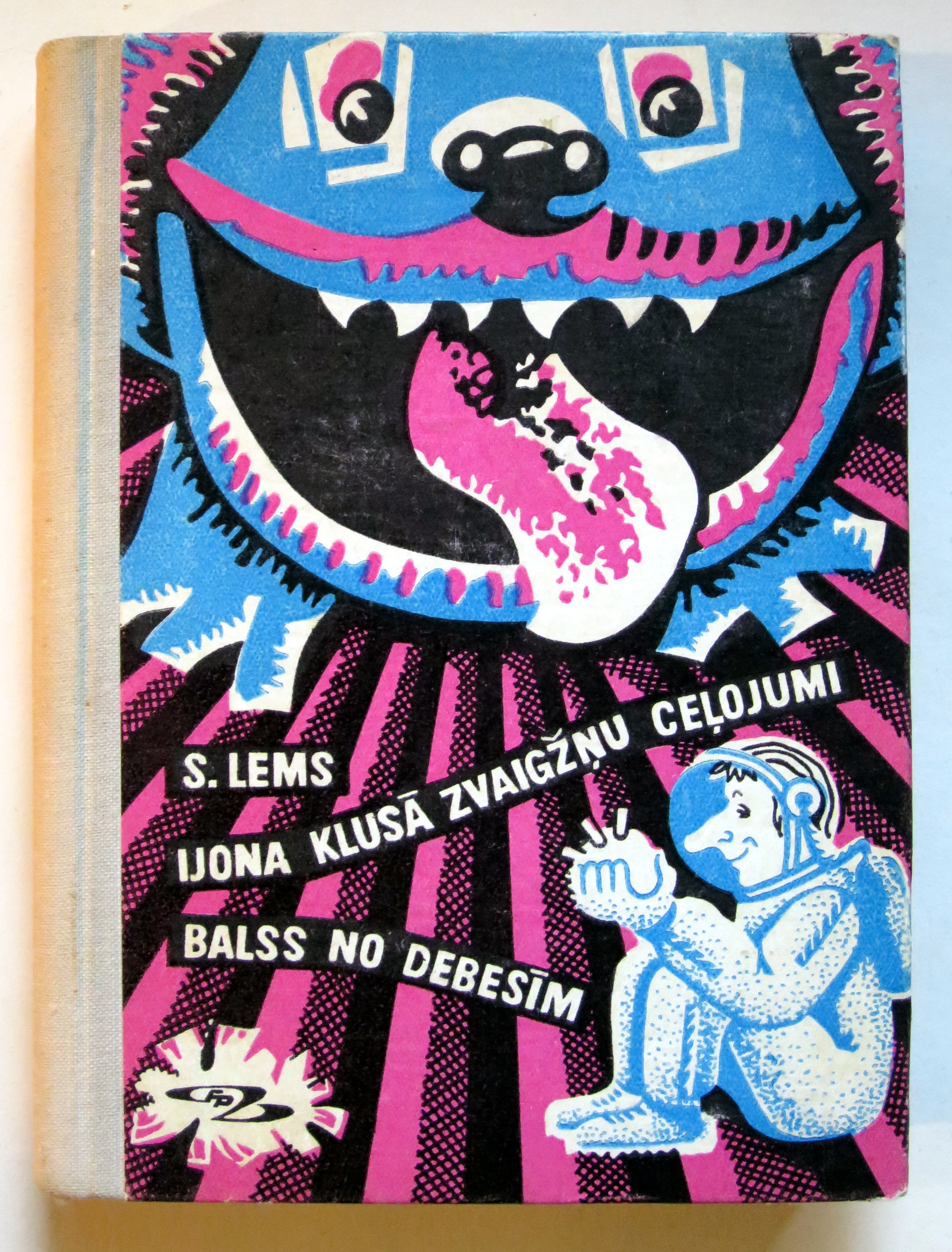

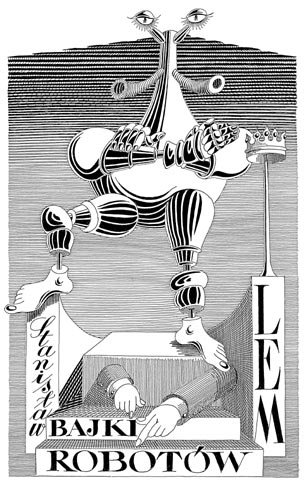

Artworks

Victor* originally wrote this guest post for Fluent C++, and then an updated version with information about C++20 for C++ Stories. I just reposted that later version, but also added some of Daniel Mróz's iconic illustrations for S. Lem's Cyberiad and Fables of Robots books.

Fables of Robots in original

The Cyberiad in German

Fables of Robots: the new illustrations for an Estonian edition

I know, I know... these aren't about sepuling, sepulkaria and Ardrites, but the Ion Tichy stories didn't have illustrations when I read them. On the other hand, the works of Daniel Mróz are simply out of this world 🚀! So I took a little bit of licentia poetica and misused robot images to represent Ardrites and their life. I hope you won't be mad at me, because they convey the kind of sci-fi feeling this guest post needed.

Now, this is the end, at last!

PS: I found the illustrations on the internet, so should the links disappear, please let me know!!!

--

* Victor Zverovich is a software engineer at Facebook working on the Thrift RPC framework and the author of the popular {fmt} library, a subset of which was adopted into C++20 as the new formatting facility. He is passionate about open-source software, designing good APIs, and science fiction. You can find Victor online on Twitter, StackOverflow, and GitHub.

** C++20 std::osyncstream, used as in

```cpp
std::osyncstream(std::cout) << "Hello, " << "World!" << '\n';
```

will "...provide the guarantee that all output made to the same final destination buffer (std::cout in the examples above) will be free of data races and will not be interleaved or garbled in any way, as long as every write to the that final destination buffer is made through (possibly different) instances of std::basic_osyncstream."

See, I learned something new!!! Reading blog posts is helpful!

*** As stated in P0645R10: "Exposing the type-erased API rather than making it an implementation detail and only providing variadic functions allows applying the same technique to the user code." 👍

**** pending C++23 format improvements:

- P2216(R3): checking of format strings at compile time (metaprogramming FTW!)

- P2093(R1):

a) using std::print(...) instead of std::cout << std::format(...), because it "avoids allocating a temporary std::string object and calling operator<< which performs formatted I/O on text that is already formatted. The number of function calls is reduced to one which, together with std::vformat-like type erasure, results in much smaller binary code" !!!

b) support for Unicode strings, like: std::print("Привет, κόσμος!") ("Hello, world!" in Russian and Greek) <=> printf("Hello world!")

All in all, this will address the open points Victor complained about in his post here: std::format in C++20!